What is odometry?

Odometry is the use of data from motion sensors to estimate change in position over time. It is used by some robots, whether legged or wheeled, to estimate (not determine) their position relative to a starting location. The method is sensitive to errors because position estimates are obtained by integrating velocity measurements over time, so small measurement errors accumulate. In most cases, rapid and accurate data collection, equipment calibration and processing are required for odometry to be used effectively.
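To see why integration makes odometry sensitive to errors, consider a hypothetical robot that drives straight at 0.5 m/s while its speed measurement is consistently 1 % too high. The minimal sketch below dead-reckons the position by summing speed × time step; all names and numbers are assumptions chosen for illustration.

```python
def integrate_position(measured_speed: float, dt: float, steps: int) -> float:
    """Dead-reckon a 1-D position by summing speed * dt over `steps` updates."""
    position = 0.0
    for _ in range(steps):
        position += measured_speed * dt
    return position


TRUE_SPEED = 0.5                     # m/s, hypothetical true speed
MEASURED_SPEED = TRUE_SPEED * 1.01   # hypothetical 1 % measurement error
DT = 0.1                             # s, hypothetical update interval

for seconds in (10, 60, 300):
    estimate = integrate_position(MEASURED_SPEED, DT, round(seconds / DT))
    error = estimate - TRUE_SPEED * seconds
    print(f"after {seconds:3d} s the position error is {error:.3f} m")
```

Although each individual measurement is only 1 % off, the position error keeps growing in proportion to the driving time; the integration itself never corrects it.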

What does a robot need for odometry?

- Rotary encoders to measure the movement of rotary joints (e.g. wheels). A higher resolution gives a higher accuracy; the resolution is expressed in degrees (per tick).

- Linear encoders to measure the movement of prismatic joints (e.g. linear actuators). A higher resolution gives a higher accuracy; the resolution is expressed in meters (per tick).

- A processing unit to read the encoder data, store position data and perform basic trigonometric calculations.

Odometry in ROS

The tutorial linked below provides an example of publishing odometry information for the navigation stack. It covers both publishing the nav_msgs/Odometry message over ROS and broadcasting a transform from an "odom" coordinate frame to a "base_link" coordinate frame over tf.

http://wiki.ros.org/navigation/Tutorials/RobotSetup/Odom

The navigation stack uses tf to determine the robot's location in the world and relate sensor data to a static map. However, tf does not provide any information about the velocity of the robot. Because of this, the navigation stack requires that any odometry source publish both a transform and a nav_msgs/Odometry message over ROS that contains velocity information.
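Both the transform and the nav_msgs/Odometry message store the orientation as a quaternion, whereas the planar calculations for a wheeled robot produce a heading angle. The plain-Python sketch below deliberately avoids ROS imports so it runs anywhere; it only shows how a planar pose and velocity map onto the message's pose and twist fields. The helper names and the dictionary layout are hypothetical; in a real node these values would be copied into an actual nav_msgs/Odometry message and transform, as in the linked tutorial.

```python
import math


def yaw_to_quaternion(yaw: float) -> tuple[float, float, float, float]:
    """Quaternion (x, y, z, w) for a pure rotation of `yaw` radians about the z-axis."""
    return (0.0, 0.0, math.sin(yaw / 2.0), math.cos(yaw / 2.0))


def planar_odometry_fields(x: float, y: float, yaw: float, vx: float, vth: float) -> dict:
    """Hypothetical helper: arrange a planar pose and velocity the way
    nav_msgs/Odometry organises them (pose in the "odom" frame,
    twist in the "base_link" child frame)."""
    return {
        "header": {"frame_id": "odom"},
        "child_frame_id": "base_link",
        "pose": {
            "position": (x, y, 0.0),
            "orientation": yaw_to_quaternion(yaw),
        },
        "twist": {
            "linear": (vx, 0.0, 0.0),    # forward speed; a two-wheeled robot cannot move sideways
            "angular": (0.0, 0.0, vth),  # rotation rate about the z-axis
        },
    }


fields = planar_odometry_fields(x=1.0, y=2.0, yaw=math.pi / 2, vx=0.2, vth=0.1)
print(fields["pose"]["orientation"])
```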

Calculation examples using rotary encoders on wheels

Assumptions:

- The robot has two wheels, each equipped with a rotary encoder (other wheels may be used for balance)

- The wheels are parallel to each other and equidistant from the centre of the robot

A sketch of these assumptions is presented in Figure 1. The gray area is the robot base, whereas the black rectangles provide a top view of the wheels.

Figure 1: Top view of the robot

For each ‘tick’ of the encoder, a binary counter is increased by one. The new position and orientation of the robot depend on the increase in ticks since the last known position/orientation, equation 1:

$$\Delta tick = tick' - tick \qquad (1)$$

where $\Delta tick$ is the increase in ticks, $tick'$ is the current number of ticks and $tick$ is the previous number of ticks.
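Because the tick count is stored in a binary counter of finite width, it eventually overflows and wraps around to zero. The minimal sketch below computes the tick difference ($\Delta tick = tick' - tick$) so that it tolerates this, assuming a hypothetical 16-bit counter, an encoder that counts down when the wheel turns backwards (e.g. a quadrature encoder), and fewer than half a counter range of ticks between two readings.

```python
COUNTER_BITS = 16                  # assumption: width of the encoder counter
COUNTER_RANGE = 1 << COUNTER_BITS  # 65536 distinct counter values


def delta_ticks(current: int, previous: int) -> int:
    """Tick difference (current - previous), corrected for counter wrap-around.

    Returns a signed value, so driving backwards gives a negative delta.
    Assumes fewer than COUNTER_RANGE // 2 ticks occur between two readings.
    """
    delta = (current - previous) % COUNTER_RANGE  # Python's % is always non-negative here
    if delta >= COUNTER_RANGE // 2:
        delta -= COUNTER_RANGE                    # a huge forward jump is really backward motion
    return delta


print(delta_ticks(120, 100))   # 20: normal case
print(delta_ticks(5, 65530))   # 11: counter wrapped from 65535 to 0
```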

One ‘tick’ corresponds to a rotation of R degrees, where R is the resolution of the encoder. To determine the distance traveled by one wheel (D), multiply the circumference of that wheel (C) by the increase in ticks and the resolution of the encoder (R), and divide by 360 degrees, equation 2:

$$D = \frac{C \cdot \Delta tick \cdot R}{360} \qquad (2)$$
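Equation 2 translates directly into code. The sketch below assumes a hypothetical wheel with a circumference of 0.20 m and an encoder with 360 ticks per revolution, so that R = 360 / 360 = 1 degree per tick.

```python
def wheel_distance(delta_ticks: int, circumference: float, resolution_deg: float) -> float:
    """Equation 2: D = C * delta_ticks * R / 360.

    circumference  -- wheel circumference C, in metres
    resolution_deg -- encoder resolution R, in degrees per tick
    """
    return circumference * delta_ticks * resolution_deg / 360.0


TICKS_PER_REV = 360            # hypothetical encoder: ticks per wheel revolution
R = 360.0 / TICKS_PER_REV      # resolution in degrees per tick
C = 0.20                       # m, hypothetical wheel circumference

print(wheel_distance(180, C, R))  # half a revolution: half the circumference
```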

Figure 2

By calculating the distance each of the two wheels has traveled and using the assumptions above, the distance traveled by the centre of the robot follows directly, equation 3:

$$D_c = \frac{D_l + D_r}{2} \qquad (3)$$

where $D_c$ is the distance traveled by the centre of the robot, and $D_l$ and $D_r$ are the distances traveled by the left and right wheel respectively. Figure 2 gives an example.

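Equations 4, 5 and 6 calculate the new x-position, y-position and orientation ($\phi$) respectively, by adding the change in position/orientation to the previous position/orientation:

$$x' = x + D_c \cos\phi \qquad (4)$$

$$y' = y + D_c \sin\phi \qquad (5)$$

$$\phi' = \phi + \frac{D_r - D_l}{L} \qquad (6)$$

The change in the x and y directions is calculated by multiplying the distance traveled by the centre of the robot by the cosine or sine, respectively, of the robot's orientation.

The change in orientation (in radians) is the difference between the distances traveled by the two wheels, divided by the distance between the wheels ($L$). With this sign convention, a positive change in $\phi$ is a counter-clockwise turn, i.e. the right wheel traveled farther than the left.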
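Equations 3 to 6 combine into a single update step. The minimal sketch below takes the wheel distances from equation 2 as input; the `Pose` class, the function name and the wheel separation of 0.30 m are hypothetical, and the orientation is kept in radians.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0    # m
    y: float = 0.0    # m
    phi: float = 0.0  # rad, counter-clockwise positive


def update_pose(pose: Pose, d_left: float, d_right: float, wheel_base: float) -> Pose:
    """Apply equations 3-6 for one pair of wheel distances (in metres)."""
    d_centre = (d_left + d_right) / 2.0               # equation 3
    x = pose.x + d_centre * math.cos(pose.phi)        # equation 4
    y = pose.y + d_centre * math.sin(pose.phi)        # equation 5
    phi = pose.phi + (d_right - d_left) / wheel_base  # equation 6
    return Pose(x, y, phi)


WHEEL_BASE = 0.30  # m, hypothetical distance between the wheels

pose = Pose()
pose = update_pose(pose, 0.10, 0.10, WHEEL_BASE)   # drive straight ahead
pose = update_pose(pose, -0.05, 0.05, WHEEL_BASE)  # turn on the spot, counter-clockwise
print(pose)
```

Equations 4 and 5 use the orientation from before the step, which is only accurate when the robot turns little between two encoder readings; this is one reason the readings should be taken rapidly.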

Restrictions

Odometry becomes inaccurate over medium and long distances, because small errors accumulate with every update.

When using wheel encoders, inaccuracies occur when the wheels slip (spin without moving the robot forward) or when the robot drives over bumps in the floor.

Solution

Use a gyroscope to measure the angular velocity of the robot. Integrating the angular velocity over time gives the angle, so the gyroscope can be used to check for deviations in the angle estimated from the wheel encoders.
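A minimal sketch of that check, assuming the gyroscope delivers angular velocity samples in rad/s at a fixed interval: the samples are integrated (rate × time step, summed) into an angle and compared with the encoder heading from equation 6. The function names and the 0.05 rad tolerance are hypothetical.

```python
import math


def integrate_gyro(rates, dt: float, phi0: float = 0.0) -> float:
    """Integrate angular velocity samples (rad/s), taken every dt seconds, into an angle (rad)."""
    phi = phi0
    for rate in rates:
        phi += rate * dt  # rectangle rule: angle change = rate * time step
    return phi


def heading_deviates(phi_encoders: float, phi_gyro: float, tolerance: float = 0.05) -> bool:
    """Return True if the two heading estimates differ by more than `tolerance` rad."""
    diff = phi_encoders - phi_gyro
    diff = math.atan2(math.sin(diff), math.cos(diff))  # wrap the difference into [-pi, pi]
    return abs(diff) > tolerance


# Hypothetical data: the robot turns at 0.5 rad/s for 2 s, sampled at 100 Hz.
phi_gyro = integrate_gyro([0.5] * 200, dt=0.01)
phi_encoders = 1.2  # hypothetical encoder heading, e.g. after a wheel slipped
print(phi_gyro, heading_deviates(phi_encoders, phi_gyro))
```

The integrated gyroscope angle drifts as well, because any bias in the measured rate is integrated too, so this check is most useful over short periods.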

------------------------------------------------------------------------------------------------

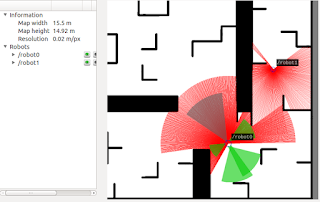

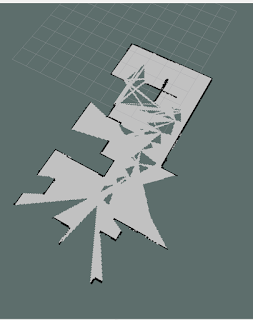

We successfully simulated the Adhoc_communication node as well as the Map Merging node; the result can be seen in the animated GIF at the bottom.